Building an Autonomous Coding Agent with the GitHub Copilot SDK

Build an autonomous coding agent with .NET 10 and the GitHub Copilot SDK. See how "Coralph" loops through issues and tests code, and learn why model choice is critical.

What if you could point an AI at your GitHub issues and let it work through them automatically: reading issues, writing implementations, running tests, and committing changes? That's what Coralph does. It's a "Ralph loop" runner I built with .NET 10 and the GitHub Copilot SDK to explore autonomous AI development workflows.

What is a "Ralph Loop"?

The name comes from my earlier Ralph Wiggum experiment, an exploration of AI-assisted development that eventually led to this more structured approach.

This Ralph loop variation is an AI-powered development workflow where the AI assistant:

- Reads open GitHub issues from your repository

- Breaks them down into small, manageable tasks

- Implements changes incrementally

- Runs tests and commits code automatically

- Repeats until all issues are resolved

The philosophy borrows from a prompt by Matt Pocock.

> My Ralph setup has evolved a LOT since you last saw it.
>
> It's totally AFK, closing GitHub issues while I work on my courses.
>
> I've learned a lot along the way. Here's a breakdown: pic.twitter.com/NqCzqx1k3Q

(Matt Pocock, @mattpocockuk, January 22, 2026)

Matt's prompt includes the concept of tracer bullets from the book The Pragmatic Programmer: small, end-to-end slices through the entire stack that validate your approach before you invest heavily. I've always called this a Durchstich (German for a "cut-through").

Why does this matter? Because AI assistants work best when they get immediate feedback. A failing test is more useful than a vague "something's wrong." By keeping each task small and running the feedback loops (build, test) after every change, the AI stays on track and always has a fresh context window to work with.

Coralph automates this loop. You give it a list of issues and a prompt file with instructions for your codebase, and it works through each task methodically: building, testing, and logging progress along the way.

How Coralph Works

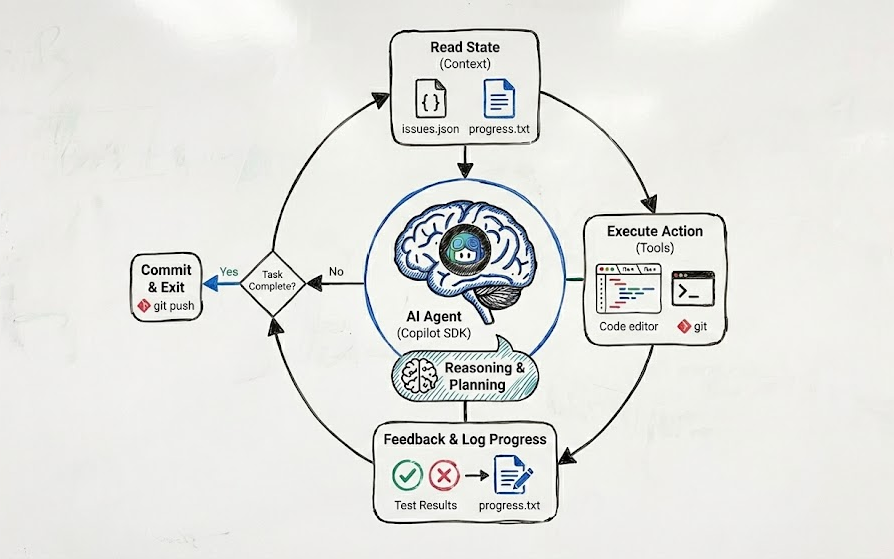

At its core, Coralph is a loop that repeatedly calls the GitHub Copilot SDK with context about your project and outstanding issues.

The Key Files

Coralph uses three files to manage state across iterations:

- prompt.md - Instructions telling the AI how to work with your codebase. This is where you specify build commands, testing patterns, coding conventions, and task prioritization rules.

- issues.json - Cached GitHub issues (refreshed via --refresh-issues using the GitHub CLI).

- progress.txt - An append-only log of completed work and learnings. The AI reads this to avoid redoing work and to learn from past iterations.
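The append-only contract for progress.txt is easy to honor with the standard library alone. Here's a minimal sketch; `AppendProgress` and the `## Iteration N` entry format are hypothetical, chosen for illustration rather than taken from Coralph's source. It only shows the idea that each iteration adds an entry and never rewrites earlier ones.

```csharp
using System;
using System.IO;

// Hypothetical helper: append one entry to an append-only progress log.
// The entry format is an assumption; Coralph's real progress.txt may look different.
static void AppendProgress(string path, int iteration, string summary)
{
    // A timestamped header makes the order of entries obvious to the model.
    var entry = $"## Iteration {iteration} ({DateTime.UtcNow:yyyy-MM-dd HH:mm} UTC){Environment.NewLine}"
              + summary.Trim() + Environment.NewLine + Environment.NewLine;

    // AppendAllText creates the file if it's missing and otherwise appends,
    // so earlier entries are never overwritten.
    File.AppendAllText(path, entry);
}

var progressPath = Path.Combine(Path.GetTempPath(), "progress-sketch.txt");
File.Delete(progressPath); // start from an empty log for this demo

AppendProgress(progressPath, 1, "Closed #12: added input validation. Learned: run tests before committing.");
AppendProgress(progressPath, 2, "Started #15: refactored the CLI parser.");
Console.Write(File.ReadAllText(progressPath));
```

Because entries are only ever appended, older learnings stay visible to the model on later iterations, and the file doubles as a human-readable audit trail.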

The Loop

The main loop is surprisingly simple:

```csharp
// progress, issues, and promptTemplate are declared before the loop
for (var i = 1; i <= opt.MaxIterations; i++)
{
    ConsoleOutput.WriteLine($"\n=== Iteration {i}/{opt.MaxIterations} ===\n");

    // Reload progress and issues so the assistant sees the updates it just made
    progress = File.Exists(opt.ProgressFile)
        ? await File.ReadAllTextAsync(opt.ProgressFile, ct)
        : string.Empty;

    issues = File.Exists(opt.IssuesFile)
        ? await File.ReadAllTextAsync(opt.IssuesFile, ct)
        : "[]";

    var combinedPrompt = PromptHelpers.BuildCombinedPrompt(promptTemplate, issues, progress);

    // Use the CopilotRunner to execute a single iteration
    string output = await CopilotRunner.RunOnceAsync(opt, combinedPrompt, ct);

    // Stop early once the model reports that no work remains
    if (PromptHelpers.ContainsComplete(output))
    {
        ConsoleOutput.WriteLine("\nCOMPLETE detected, stopping.\n");
        await CommitProgressIfNeededAsync(opt.ProgressFile, ct);
        break;
    }
}
```

On each iteration, the AI reads the current state (issues + progress), decides what to work on next, makes changes using standard tools (file editing, bash commands, git), and logs what it learned. The progress file creates a persistent memory across iterations.
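The loop relies on two helpers whose bodies aren't shown: `PromptHelpers.BuildCombinedPrompt` and `PromptHelpers.ContainsComplete`. Below is a minimal sketch of what they could look like. The section headings and the `<promise>COMPLETE</promise>` marker are assumptions made for illustration; Coralph's real helpers may assemble the prompt and detect completion differently.

```csharp
using System;
using System.Text;

// Hypothetical version of BuildCombinedPrompt: the template first, then the
// current issues and progress, each under its own heading.
static string BuildCombinedPrompt(string template, string issuesJson, string progress)
{
    var sb = new StringBuilder();
    sb.AppendLine(template.Trim());
    sb.AppendLine();
    sb.AppendLine("# Open issues (issues.json)");
    sb.AppendLine(issuesJson);
    sb.AppendLine();
    sb.AppendLine("# Progress so far (progress.txt)");
    // Make an empty log explicit so the model knows nothing has been done yet.
    sb.AppendLine(string.IsNullOrWhiteSpace(progress) ? "(no progress recorded yet)" : progress);
    return sb.ToString();
}

// Hypothetical version of ContainsComplete, assuming the prompt asks the model
// to print this exact marker once no work remains.
static bool ContainsComplete(string output) =>
    output.Contains("<promise>COMPLETE</promise>", StringComparison.Ordinal);

var prompt = BuildCombinedPrompt("Work on one issue at a time.", "[]", "");
Console.WriteLine(prompt);
Console.WriteLine(ContainsComplete("All issues closed. <promise>COMPLETE</promise>")); // True
```

A distinctive marker plus an ordinal (case-sensitive) comparison keeps ordinary sentences such as "the task is complete" from ending the loop early.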

Custom Tools

Beyond the standard Copilot tools, Coralph registers domain-specific tools that give the AI structured access to project data:

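```csharp
// Tools are defined in CustomTools.cs and gathered here
var customTools = CustomTools.GetDefaultTools(opt.IssuesFile, opt.ProgressFile);

// They are then passed into the session configuration
await using (var session = await client.CreateSessionAsync(new SessionConfig
{
    Model = opt.Model,
    Streaming = true,
    Tools = customTools,
    // Approve every permission request so the loop never stops to wait for a human.
    // Convenient for AFK runs, but it means the agent's actions are not reviewed.
    OnPermissionRequest = (request, invocation) =>
        Task.FromResult(new PermissionRequestResult { Kind = "approved" }),
}))
{
    // ... send the prompt and process events for this iteration (omitted here)
}
```

These tools let the AI: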

- Query issues - Get structured data about open issues, their bodies, and comments

- Review progress - See what's been completed in recent iterations

- Search for patterns - Find specific terms in the progress log
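The "review progress" and "search for patterns" tools need little more than file reads. Here's a minimal sketch of the logic such tools could wrap; `RecentProgress` and `SearchProgress` are hypothetical names, and the line-based approach is an assumption rather than Coralph's actual implementation.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical "review progress" logic: return only the last few lines of the log.
static string[] RecentProgress(string path, int maxLines)
{
    if (!File.Exists(path)) return Array.Empty<string>();
    // TakeLast keeps only the tail so the tool result stays small.
    return File.ReadAllLines(path).TakeLast(maxLines).ToArray();
}

// Hypothetical "search" logic: case-insensitive substring match per line.
static string[] SearchProgress(string path, string term)
{
    if (!File.Exists(path)) return Array.Empty<string>();
    return File.ReadLines(path)
        .Where(line => line.Contains(term, StringComparison.OrdinalIgnoreCase))
        .ToArray();
}

var log = Path.Combine(Path.GetTempPath(), "progress-search-sketch.txt");
File.WriteAllLines(log, new[] { "Iteration 1: fixed #3", "Learned: Run tests before commit", "Iteration 2: fixed #7" });

Console.WriteLine(string.Join(Environment.NewLine, SearchProgress(log, "tests")));
Console.WriteLine(string.Join(Environment.NewLine, RecentProgress(log, 2)));
```

Returning only matching or recent lines keeps tool results compact, so the model doesn't spend its context window re-reading the whole log.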

This is where the SDK shines. Adding a custom tool is just a matter of writing a method and registering it; the SDK handles serialization, invocation, and streaming the results back.

The GitHub Copilot SDK in Action

The GitHub Copilot SDK makes building AI-powered tools surprisingly approachable. Here's what stood out while building Coralph:

Streaming Responses

Instead of waiting for complete responses, you can subscribe to events as they arrive. This is essential for a tool that might run for minutes per iteration:

```csharp
var output = new StringBuilder();  // accumulates the streamed assistant text
string? currentTool = null;        // remembers the tool that is currently running

using var sub = session.On(evt =>
{
    switch (evt)
    {
        case AssistantMessageDeltaEvent delta:
            // Handle assistant text content (green)
            ConsoleOutput.WriteAssistant(delta.Data.DeltaContent);
            output.Append(delta.Data.DeltaContent);
            break;

        case AssistantReasoningDeltaEvent reasoning:
            // Handle reasoning steps (cyan)
            ConsoleOutput.WriteReasoning(reasoning.Data.DeltaContent);
            break;

        case ToolExecutionStartEvent toolStart:
            // Handle tool execution start (yellow)
            currentTool = toolStart.Data.ToolName;
            ConsoleOutput.WriteToolStart(toolStart.Data.ToolName);
            break;

        case ToolExecutionCompleteEvent toolComplete:
            // Handle the result returned by a tool. `toolStart` is out of scope in this
            // case, so use the name remembered above (assumes one tool runs at a time).
            var display = SummarizeToolOutput(toolComplete.Data.Result?.Content);
            ConsoleOutput.WriteToolComplete(currentTool ?? "tool", display);
            break;
    }
});
```

Coralph uses this to show color-coded output: cyan for reasoning, green for assistant text, and yellow for tool execution. You see the AI "thinking" in real time.
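The tool-complete handler calls `SummarizeToolOutput`, which isn't shown. Here's one hypothetical way to write it, assuming the tool result content arrives as a string: show the first line, truncate it if it's long, and report how many lines were hidden.

```csharp
using System;

// Hypothetical SummarizeToolOutput: keeps console output readable when a tool
// (for example a build or test run) returns many lines of text.
static string SummarizeToolOutput(string? content, int maxLength = 120)
{
    if (string.IsNullOrWhiteSpace(content)) return "(no output)";

    var lines = content.Trim().Split('\n');
    var first = lines[0].TrimEnd('\r');

    // Truncate a long first line so one result can't flood the console.
    if (first.Length > maxLength) first = first[..maxLength] + "...";

    // Say how much was hidden instead of printing everything.
    return lines.Length > 1 ? $"{first} (+{lines.Length - 1} more lines)" : first;
}

Console.WriteLine(SummarizeToolOutput("Build succeeded.\n0 Warning(s)\n0 Error(s)"));
// Build succeeded. (+2 more lines)
```

Only the console display is shortened; the model still receives the full tool result.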

Tool Registration

Adding custom tools requires minimal ceremony. Use the AIFunctionFactory to wrap your logic with a clear name and description, allowing the SDK to handle the technical schema generation and model integration details:

```csharp
// Defining the tool in CustomTools.cs
// (AIFunctionFactory comes from Microsoft.Extensions.AI; [Description] from System.ComponentModel)
AIFunctionFactory.Create(
    // The parameter's [Description] becomes part of the schema the model sees
    ([Description("Include closed issues in results")] bool? includeClosed) =>
        ListOpenIssuesAsync(issuesFile, includeClosed ?? false),
    "list_open_issues",
    "List all open issues from issues.json with their number, title, body, and state"
)
```

This logic is implemented in the GetDefaultTools method, which returns an array of AIFunction objects that are then passed into the Copilot session configuration.
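The registered lambda delegates to `ListOpenIssuesAsync`. Below is a synchronous sketch of the filtering such a method might do, using System.Text.Json. It assumes issues.json is a JSON array shaped like the output of `gh issue list --json number,title,body,state`, with `state` values such as `OPEN` and `CLOSED`; `ListIssues` is a hypothetical name, and Coralph's real method may differ.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Text.Json;

// Hypothetical stand-in for ListOpenIssuesAsync: filter issues.json by state.
static string ListIssues(string issuesFile, bool includeClosed)
{
    if (!File.Exists(issuesFile)) return "[]";

    using var doc = JsonDocument.Parse(File.ReadAllText(issuesFile));
    var selected = doc.RootElement.EnumerateArray()
        .Where(issue => includeClosed
            // Issues without a "state" property are treated as open.
            || !issue.TryGetProperty("state", out var state)
            || string.Equals(state.GetString(), "OPEN", StringComparison.OrdinalIgnoreCase))
        .ToList(); // materialize while the JsonDocument is still alive

    // Return compact JSON text as the tool result.
    return JsonSerializer.Serialize(selected);
}

var issuesPath = Path.Combine(Path.GetTempPath(), "issues-sketch.json");
File.WriteAllText(issuesPath,
    """[{"number":1,"title":"Add tests","body":"Cover the parser","state":"OPEN"},{"number":2,"title":"Old bug","body":"Fixed already","state":"CLOSED"}]""");

Console.WriteLine(ListIssues(issuesPath, includeClosed: false));
```

Returning JSON rather than prose keeps the result structured, so the model can read issue numbers and titles directly.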

The SDK Handles the Heavy Lifting

What I didn't have to build:

- Conversation management and context windowing

- Tool execution and result handling

- Streaming infrastructure

- Model switching and configuration

This let me focus on the workflow logic: how to structure the loop, what context to provide, and how to detect completion, rather than API plumbing.

What I Learned (The Hard Way)

I used Coralph to dogfood its own development, expanding functionality while running the loop on itself. This revealed several rough edges.

Model performance was mixed, and I switched models a lot. I usually went with frontier models first, so GPT-5.2-Codex (High) was my first choice: it was much cheaper than Opus 4.5, which I also tested. But GPT-5.2-Codex (High) felt clunky and slow, and Opus 4.5 was superior in this harness.

Then I looked at the model selection again and thought: why not give the default model a try? That was the turning point.

Claude Sonnet 4.5 found and fixed a bug that GPT-5.2-Codex missed entirely. It's now my default model for Copilot CLI work.

Try It Yourself

Getting started is simple. Pre-built binaries are available for Windows, macOS (Intel and Apple Silicon), and Linux:

```bash
# Download from GitHub releases and make the binary executable
chmod +x coralph-osx-arm64

# Navigate to your repository
# (the commands below assume the binary sits in the repository root;
#  otherwise, call it by its full path)
cd your-repo

# Fetch your GitHub issues
./coralph-osx-arm64 --refresh-issues --repo owner/repo-name

# Run the loop
./coralph-osx-arm64 --max-iterations 10
```

Or build from source with .NET 10:

```bash
dotnet run --project src/Coralph -- --max-iterations 10
```

Requirements: You'll need a GitHub Copilot Pro or Pro+ subscription, as the CLI uses premium requests. A free tier might work with different models, but I haven't tested that path. I recommend Claude Sonnet 4.5 as your model.

The key to good results is crafting your prompt.md. Tell the AI about your build commands, testing practices, and coding conventions. Coralph includes example templates for Python, JavaScript, Go, and Rust projects; for the best results, combine them with the default prompt.

What's Next

Coralph is open source under the MIT license. The architecture is intentionally simple, about 500 lines of C# that demonstrate what's possible when you combine the Copilot SDK with a thoughtful workflow.

I built Coralph to explore what autonomous AI development looks like in practice, and then used it to build itself. That recursive dogfooding taught me more than any isolated test would have. The biggest surprise? Model choice matters far more than I expected. Claude Sonnet 4.5 caught issues that other models sailed past. The GitHub Copilot CLI still has a long way to go before it's a great harness.

The GitHub Copilot SDK opens up a lot of possibilities. Whether you're building developer tools, automation pipelines, or entirely new workflows, the barrier to entry is lower than you might think.

Check out the code: github.com/dariuszparys/coralph