Should AI Be Listed as a Co-Author in Your Git Commits?

Should AI assistants like Claude be co-authors in your git commits? This post explores the debate on AI code attribution and argues for transparency in development.

We've all been there. You're stuck on a particularly difficult piece of code, and you turn to your AI assistant for help. Or you let an AI agent write part of a feature. Whether it's GitHub Copilot, Claude, or ChatGPT, these tools have become part of our daily workflow. But here's a question I'd like to discuss: shouldn't we acknowledge when AI helps write our code?

Why This Matters to Me

I've always believed in transparency when it comes to code attribution. When I copy a solution from Stack Overflow, I add a comment with the link. When I adapt code from another repository, I credit the source. It's not just good practice, it's about being honest about how the code came to be.

So why should AI be any different?

When I use AI to generate code or help solve a problem, I think it deserves the same acknowledgment. It's become such a fundamental part of my workflow that not mentioning it feels like leaving out an important part of the story.

The Current State of AI Attribution

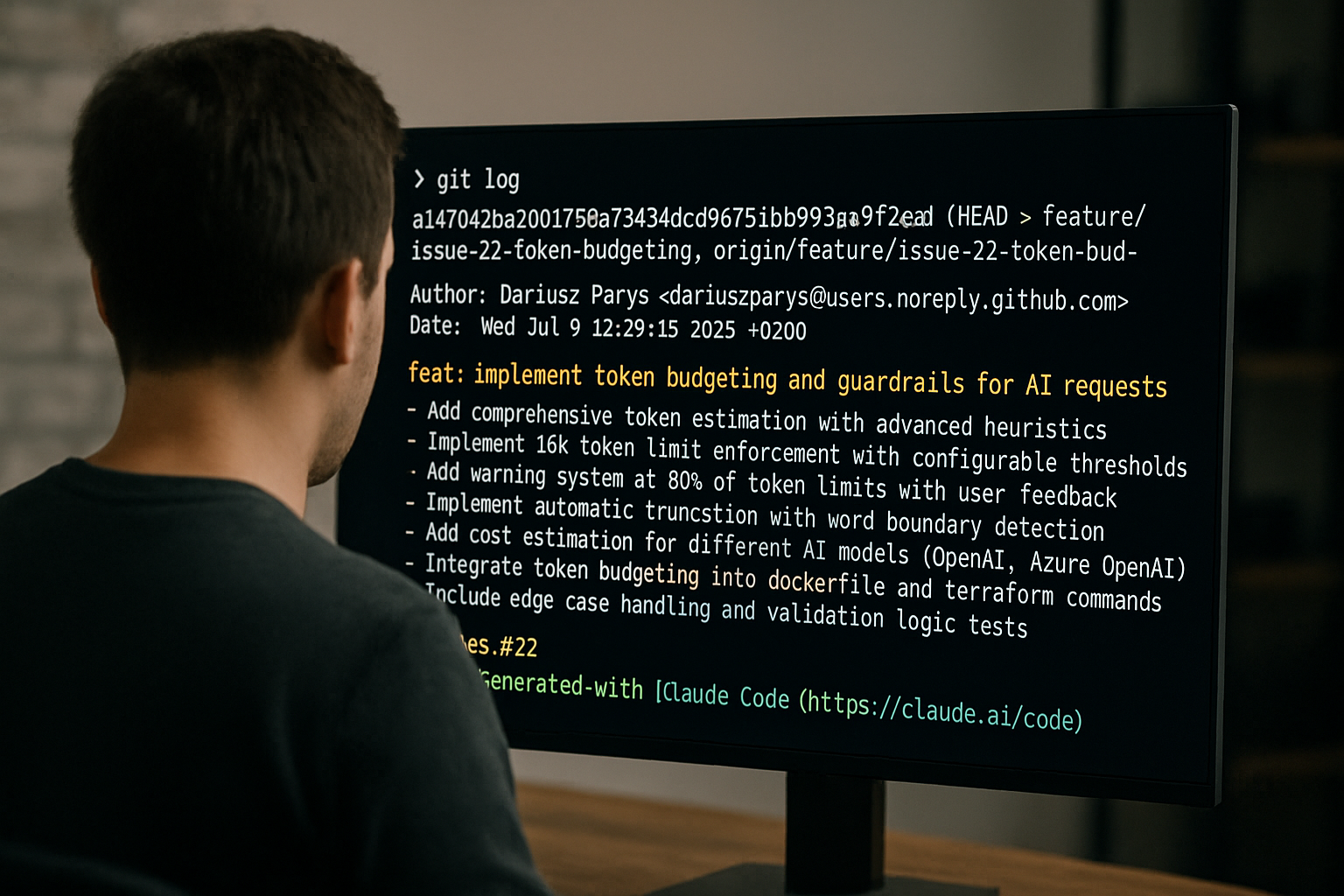

Interestingly, Claude Code (Anthropic's coding assistant) initially took matters into its own hands, automatically adding itself as a co-author whenever it was asked to commit changes:

Co-Authored-By: Claude <noreply@anthropic.com>

This sparked quite a debate. Some developers found it useful, while others felt it was unnecessary or even annoying. Some even wrote instructions for removing it, which honestly surprises me, because I think including it is exactly the right approach.

When AI Attribution Actually Matters

Let me be clear: I'm not talking about flagging every AI interaction. Tab-completion, syntax fixes, or minor suggestions from Copilot, Cursor, or similar tools? Those don't need attribution.

I'm talking about substantial contributions - when AI writes complete functions, implements entire features, or generates the bulk of a commit. These are the cases where attribution matters.

Why I Flag AI-Generated Code

- It's About Transparency: Just like citing a Stack Overflow answer or a blog post, acknowledging AI assistance provides context. Future maintainers (including future me) will know that this code had machine input.

- It’s a Signal for Caution: AI-generated code isn’t inherently worse, but it does tend to come with quirks - missing edge cases, weird abstractions, or assumptions that don’t quite fit the domain. It’s a cue to review that section more cautiously.

- It Sets the Right Precedent: As AI becomes more prevalent in development, we need to establish good practices now. Being open about AI usage helps build trust within teams and the broader community.

How I Handle AI Attribution

In my projects, I've adopted a few practices. I mostly use Claude Code, so for significant AI contributions I keep the default commit message it generates. Here are some examples:

Fix Azure OpenAI deployment configuration for dogfooding CI

- Add AZURE_OPENAI_DEPLOYMENT environment variable to CI workflow

- Update default deployment name from "gpt-4" to "gpt-4.1-mini" in both analyzers

- Resolves 404 DeploymentNotFound error in GitHub workflow analysis

- Aligns with user's deployed model: gpt-4.1-mini

This fixes the dogfooding CI failure where the code was looking for a
deployment named "gpt-4" but the actual deployment is "gpt-4.1-mini".

🤖 Generated with [Claude Code](https://claude.ai/code)

Co-Authored-By: Claude <noreply@anthropic.com>

An example from one of my private repos, where Claude Code also wrote the commit message.

git commit -m "Fix edge case in parser [AI]"

For smaller assists, I might just add a tag.
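A consistent tag or trailer also makes flagged commits searchable later. The following is a rough sketch rather than a recommended workflow: it builds a throwaway repository with three made-up commits, then finds the flagged ones with `git log --grep`. The identity and the commit subjects other than the `[AI]` one are hypothetical.

```shell
#!/bin/sh
set -eu

# Throwaway repository with a few illustrative commits.
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Example Dev"        # hypothetical identity
git config user.email "dev@example.com"   # hypothetical identity

git commit -q --allow-empty -m "Fix edge case in parser [AI]"
git commit -q --allow-empty -m "Update README"
# A second -m starts a new paragraph, so the trailer lands at the end of the message.
git commit -q --allow-empty -m "Implement CSV export" \
  -m "Co-Authored-By: Claude <noreply@anthropic.com>"

# --fixed-strings matches "[AI]" literally instead of as a regex character class.
tagged=$(git log --fixed-strings --grep='[AI]' --format='%s')

# -i ignores case, so "Co-authored-by" and "Co-Authored-By" both match.
coauthored=$(git log -i --grep='Co-authored-by: Claude' --format='%s')

echo "Tagged: $tagged"
echo "Co-authored: $coauthored"
```

Without `--fixed-strings`, the pattern `[AI]` would match any message containing an "A" or an "I", including the unflagged README commit.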

## AI Involvement

- Initial implementation generated by Claude Sonnet

- Refined error handling and added tests manually

- Performance optimizations suggested by GitHub Copilot

In pull requests, I add an "AI Involvement" section when multiple tools have been used.

A Simple Decision Framework

If you’re wondering where I draw the line, here’s the logic tree that lives in my head:

The key is knowing when AI is just autocompleting and when it’s actually problem solving.
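One way to write down the criteria described in this post is a small shell function. This is only a rough sketch: the category names are hypothetical labels invented for this example, not an established taxonomy, and real contributions won't always fit neatly into one bucket.

```shell
#!/bin/sh

# Map a description of the AI's contribution to an attribution style.
# The category names are illustrative labels, not a standard.
attribution_for() {
  case "$1" in
    autocomplete|syntax-fix|minor-suggestion)
      echo "none" ;;          # trivial help: no attribution
    significant-assist)
      echo "tag" ;;           # e.g. "[AI]" in the commit subject
    full-function|full-feature|bulk-of-commit)
      echo "co-author" ;;     # Co-Authored-By trailer
    *)
      echo "unknown" ;;       # unrecognized category: decide by hand
  esac
}

attribution_for autocomplete        # prints: none
attribution_for significant-assist  # prints: tag
attribution_for full-feature        # prints: co-author
```

The fallback branch is deliberate: anything that doesn't match a known category is left for a human to judge rather than silently treated as trivial.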

The Counter-Arguments (And Why I Disagree)

I've heard the arguments against AI attribution:

"It's just another tool, like an IDE or linter."

For tab-completion and syntax suggestions, I agree. But when AI writes entire functions or solves complex problems? That's qualitatively different from what traditional tools do.

"The developer is still responsible."

Absolutely! But responsibility and attribution aren't mutually exclusive. I'm responsible for code I adapt from Stack Overflow too, but I still cite it.

"It adds unnecessary noise."

I'd argue that a single line in a commit message is hardly noise, especially compared to the value of knowing the code's origin. Plus, I'm only advocating for attribution on substantial contributions, not every AI interaction.

Moving Forward

I'm not saying everyone needs to adopt this practice immediately. But I do think we should have this conversation. As AI tools become more sophisticated and contribute more substantially to our codebases, the question of attribution will only become more important.

For now, I'll continue to flag AI contributions in my commits. Not because I have to, but because I believe it's the right thing to do. It's about maintaining the same standards of attribution and transparency that we've always valued in the development community.

To Summarize - My AI Attribution Practices

- Use Co-authored-by for major AI-written features

- Tag [AI] in commits when it contributed significantly

- Add an "AI Involvement" section in PRs

- No attribution for trivial suggestions or syntax help

What's your take? Do you flag AI-generated code in your commits? I'd love to hear how others are handling this evolving aspect of modern development.

Note: This post was written with the help of AI, which turned my thoughts into structured output. See what I did there? 😉